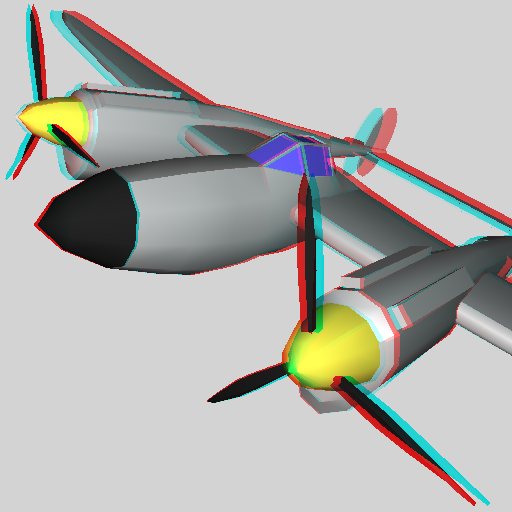

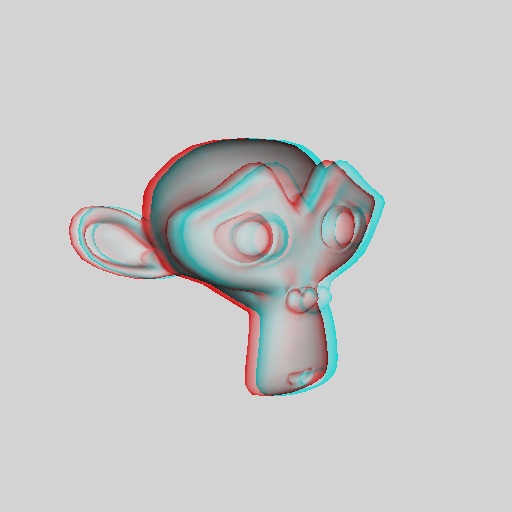

This tutorial demonstrates how simple it is to set up stereo rendering using red/cyan anaglyphs with Visualization Library.
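
As a library-independent illustration of the red/cyan idea, the sketch below mimics what happens to a single framebuffer pixel when two renderings write to the same color buffer through complementary color masks. It uses the same mask values and pass order as the `App_Stereo.cpp` listing. The types `Rgb` and `ChannelMask` and the functions `maskedWrite`, `composeAnaglyph` and `anaglyphExample` are hypothetical names introduced here, not part of Visualization Library.

```cpp
#include <cassert>

// Hypothetical stand-ins for illustration; these are not Visualization Library types.
struct Rgb { float r, g, b; };
struct ChannelMask { bool r, g, b; };

// Write 'src' into 'dst', touching only the channels enabled in 'mask'.
// This is the effect a color write mask has on one framebuffer pixel.
inline void maskedWrite(Rgb& dst, const Rgb& src, const ChannelMask& mask)
{
    if (mask.r) dst.r = src.r;
    if (mask.g) dst.g = src.g;
    if (mask.b) dst.b = src.b;
}

// Compose one anaglyph pixel from the colors produced by the two renderings.
// The first rendering uses the mask (false, true, true), the second uses
// (true, false, false), and the color buffer is not cleared in between.
inline Rgb composeAnaglyph(const Rgb& leftRendering, const Rgb& rightRendering)
{
    Rgb pixel = {0.0f, 0.0f, 0.0f};                            // color buffer cleared once
    maskedWrite(pixel, leftRendering,  {false, true, true});   // green and blue only
    maskedWrite(pixel, rightRendering, {true, false, false});  // red only
    return pixel;
}

// Small usage example: each output channel comes from exactly one rendering.
inline void anaglyphExample()
{
    const Rgb leftRendering  = {0.1f, 0.2f, 0.3f};
    const Rgb rightRendering = {0.9f, 0.8f, 0.7f};
    const Rgb out = composeAnaglyph(leftRendering, rightRendering);
    assert(out.r == rightRendering.r);
    assert(out.g == leftRendering.g && out.b == leftRendering.b);
}
```

Because the two masks do not overlap, the second pass cannot overwrite what the first pass drew, which is why the second rendering only needs to clear the depth buffer.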

[From App_Stereo.cpp]

```cpp
#include "BaseDemo.hpp"
#include <vlGraphics/GeometryPrimitives.hpp>
#include <vlGraphics/Light.hpp>
#include <vlGraphics/RenderingTree.hpp>
#include <vlGraphics/Rendering.hpp>
#include <vlGraphics/StereoCamera.hpp>

using namespace vl;

class App_Stereo: public BaseDemo
{
public:
  virtual String appletInfo()
  {
    return BaseDemo::appletInfo();
  }

  void updateScene()
  {
    /* update the left and right cameras to reflect the movement of the mono camera */
    mStereoCamera->updateLeftRightCameras();

    /* animate the rotating spheres */
    mRootTransform->setLocalMatrix( mat4::getRotation( Time::currentTime() * 45, 0,1,0 ) );
    mRootTransform->computeWorldMatrixRecursive();
  }

  void initEvent()
  {
    Log::notify(appletInfo());

    /* save for later */
    OpenGLContext* gl_context = rendering()->as<Rendering>()->renderer()->framebuffer()->openglContext();

    /* let the two left and right cameras follow the mono camera */
    mStereoCamera = new StereoCamera;
    mStereoCamera->setMonoCamera(mMonoCamera.get());

    /* install two renderings, one for the left eye and one for the right */
    mLeftRendering = new Rendering;
    mRightRendering = new Rendering;
    mMainRendering = new RenderingTree;
    mMainRendering->subRenderings()->push_back(mLeftRendering.get());
    mMainRendering->subRenderings()->push_back(mRightRendering.get());
    setRendering(mMainRendering.get());

    /* let the left and right scene managers share the same scene */
    mLeftRendering->sceneManagers()->push_back(sceneManager());
    mRightRendering->sceneManagers()->push_back(sceneManager());

    /* let the left and right rendering write on the same framebuffer */
    mLeftRendering->renderer()->setFramebuffer(gl_context->framebuffer());
    mRightRendering->renderer()->setFramebuffer(gl_context->framebuffer());

    /* set left/right cameras to the cameras of the left and right rendering,
       the viewport will be automatically taken from the mono camera. */
    mStereoCamera->setLeftCamera(mLeftRendering->camera());
    mStereoCamera->setRightCamera(mRightRendering->camera());

    /* set adequate eye separation and convergence */
    mStereoCamera->setConvergence(20);
    mStereoCamera->setEyeSeparation(1);

    /* setup color masks for red (left) / cyan (right) glasses */
    mLeftRendering->renderer()->overriddenDefaultRenderStates().push_back(RenderStateSlot(new ColorMask(false, true, true),-1));

    /* for the right we set the clear flags to clear only the depth buffer, not the color buffer */
    mRightRendering->renderer()->overriddenDefaultRenderStates().push_back(RenderStateSlot(new ColorMask(true, false, false),-1));
    mRightRendering->renderer()->setClearFlags(CF_CLEAR_DEPTH);

    /* let the trackball rotate the mono camera */
    trackball()->setCamera(mMonoCamera.get());
    trackball()->setTransform(NULL);

    /* populate the scene */
    setupScene();
  }

  // populates the scene
  void setupScene()
  {
    enables->enable(EN_DEPTH_TEST);
    enables->enable(EN_LIGHTING);

    mRootTransform = new Transform;

    // central sphere
    sphere->computeNormals();

    // rotating spheres
    float count = 10;
    for(size_t i=0; i<count; ++i)
    {
      satellite->computeNormals();
      child_transform->setLocalMatrix( mat4::getRotation(360.0f * (i/count), 0,1,0) );
    }
  }

  void resizeEvent(int w, int h)
  {
    /* update the viewport of the main camera */
    mMonoCamera->viewport()->setWidth ( w );
    mMonoCamera->viewport()->setHeight( h );

    /* update the left and right cameras since the viewport has changed */
    mStereoCamera->updateLeftRightCameras();
  }

  void loadModel(const std::vector<String>& files)
  {
    // resets the scene
    sceneManager()->tree()->actors()->clear();

    for(unsigned int ifile=0; ifile<files.size(); ++ifile)
    {
      {
        Log::error("No data found.\n");
        continue;
      }

      std::vector< ref<Actor> > actors;
      for(unsigned iact=0; iact<actors.size(); ++iact)
      {
        ref<Actor> actor = actors[iact].get();
        // define a reasonable Shader
        // add the actor to the scene
        sceneManager()->tree()->addActor( actor.get() );
      }
    }

    // position the camera to nicely see the objects in the scene
    trackball()->adjustView( sceneManager(), vec3(0,0,1)/*direction*/, vec3(0,1,0)/*up*/, 1.0f/*bias*/ );

    /* try to adjust the convergence and eye separation to reasonable values */
    sceneManager()->computeBounds();
    real convergence = sceneManager()->boundingSphere().radius() / 2;
    real eye_separation = convergence/20;
    mStereoCamera->setConvergence(convergence);
    mStereoCamera->setEyeSeparation(eye_separation);
  }

  // load the files dropped in the window
  void fileDroppedEvent(const std::vector<String>& files) { loadModel(files); }

protected:
  ref<RenderingTree> mMainRendering;
  ref<Rendering> mLeftRendering;
  ref<Rendering> mRightRendering;
  ref<Rendering> mCompositingRendering;
  ref<Camera> mMonoCamera;
  ref<StereoCamera> mStereoCamera;
  ref<Transform> mRootTransform;
};

// Have fun!
```
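
The rule of thumb used in `loadModel()` to pick the convergence and eye separation can be isolated in a few lines. The sketch below reproduces that arithmetic and then shows one common way such values feed into a per-eye projection: an off-axis (asymmetric) frustum. The off-axis part is an assumption added for illustration. It is the textbook technique, not necessarily what `StereoCamera::updateLeftRightCameras()` does internally. The names `StereoParams`, `FrustumX`, `stereoParamsFromRadius` and `offAxisFrustumX` are hypothetical.

```cpp
#include <cassert>

// Hypothetical helper types for illustration; not part of Visualization Library.
struct StereoParams { double convergence; double eyeSeparation; };
struct FrustumX     { double left; double right; };

// Same heuristic as loadModel(): the convergence distance is half the
// bounding-sphere radius, and the eye separation is 1/20 of the convergence.
inline StereoParams stereoParamsFromRadius(double boundingRadius)
{
    assert(boundingRadius >= 0.0);
    StereoParams p;
    p.convergence   = boundingRadius / 2.0;
    p.eyeSeparation = p.convergence / 20.0;
    return p;
}

// Horizontal near-plane bounds of one eye's frustum with off-axis projection
// (assumed technique, see the note above). eyeSign is -1 for the left eye and
// +1 for the right eye. The eye is displaced sideways by
// eyeSign * eyeSeparation / 2, so its frustum is shifted the opposite way,
// scaled from the convergence plane down to the near plane.
inline FrustumX offAxisFrustumX(double halfWidthAtNear, double nearPlane,
                                const StereoParams& p, int eyeSign)
{
    assert(p.convergence > 0.0 && nearPlane > 0.0);
    assert(eyeSign == -1 || eyeSign == 1);
    const double shift = -eyeSign * 0.5 * p.eyeSeparation * nearPlane / p.convergence;
    FrustumX f;
    f.left  = -halfWidthAtNear + shift;
    f.right =  halfWidthAtNear + shift;
    return f;
}
```

For a scene whose bounding sphere has radius 40, `stereoParamsFromRadius` yields a convergence of 20 and an eye separation of 1, which are the same values `initEvent()` sets as defaults for the built-in sphere scene.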